When I first encountered the Dirac equation in a particle physics course a few months ago, I decided that I needed to delve more deeply into the mathematics which lies at its core and around it, which means a return to algebra, group theory and to some extent topology. The physical principles behind many of the equations and arguments in the genuine physics literature are fairly sound, but I can’t help feeling as if I’m standing at the bottom of a valley, unable to make out the shape of the world outside it.

I’ve picked up some books with the intent of reading them over the summer, books which I decided would at least be interesting regardless of whether they end up helping me resolve the questions that arose. One of them was Simon L. Altmann’s Rotations, Quaternions, and Double Groups, and it is not often that I find a book where the foreword and introduction act as more than simple filler. The content in this case was a historical exposé on two men and their work: William Rowan Hamilton, the father of quaternions, and another mathematician called Olinde Rodrigues. Now who is Rodrigues, I asked? I know of only one formula which bears that name, and he was indeed the first, in 1816, to give the so-called Rodrigues formula for the Legendre polynomials, known to every physics student who ever wanted to solve the Schrödinger equation for hydrogen or any other partial differential equation with spherical symmetry.

Apparently Olinde Rodrigues, who lived in France all his life, was responsible for many other things, as I learned reading more about this man whom I had previously only known as a disembodied name attached to a formula. And though I found his story interesting in itself, my interest was compounded by the fact that time and time again, when reading an online article on him, I would find Altmann attached to it in some way, chronicling his life and adding new details upon each retelling. I find some joy in the thought that every man, in a way, has another dedicated to his memory. Whether this dedication is just I will have to decide upon actually reading what came of his work directly, but apparently besides working on Legendre polynomials he also made contributions to combinatorics, wrote an odd essay on continued fractions, and worked in banking, which was his actual profession (though he was a trained mathematician). Altmann’s primary interest, however, is in Rodrigues’s work on rotations (the rotation group) and its connection to quaternions, algebra and physics.

One of the simpler questions (and I’m being ironic about that) is how to construct from two successive rotations a single rotation which acts in the same way, and furthermore how to describe a general rotation in a transparent and efficient way. One alternative is Euler angles, which I remember vaguely from my course in rigid body mechanics, and Rodrigues provided his own take on how rotations could be represented, using his own parameters.

The question of how to compose two rotations formally is, by the way, fundamental to understanding how a rigid body such as a sphere can move through space. A rather simple construction shows that no matter how a rigid body has been moved about its center of mass, it can be returned to its original configuration with two simple rotations.

For this we can use the analogy of a solid sphere with a thin transparent shell encapsulating it, on which we consider two pairs of points. A and B are two points on the inner sphere, and A’ and B’ are two points on the shell which are initially superimposed on A and B. The outer shell is then moved around in some way, and the claim is that A’ and B’ can again be superimposed on A and B by two simple rotations. The first rotation returns A’ to A and is done by rotating the outer shell around the normal to the plane AOA’, where O is the common center of the sphere and the shell. Now, keeping A’ fixed, we rotate the shell around the axis OA (or OA’, which is now the same line) until B’ is superimposed on B. This can be done since the distance AB is the same as A’B’. And we’re back where we started.
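To convince myself that the two-step construction really works, here is a minimal Python sketch of it. The function names (`rotate`, `signed_angle`, `restoring_rotations`) are my own and not taken from Altmann, and the code assumes unit vectors, that A’ is not equal to A or its antipode, and that B does not lie on the line OA. The individual rotations are carried out with the vector rotation formula that today also carries Rodrigues’s name.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

def normalize(v):
    n = math.sqrt(dot(v, v))
    return tuple(a / n for a in v)

def rotate(v, axis, angle):
    """Rotate v about `axis` by `angle` radians (Rodrigues' rotation formula)."""
    k = normalize(axis)
    c, s = math.cos(angle), math.sin(angle)
    kxv, kv = cross(k, v), dot(k, v)
    return tuple(v[i] * c + kxv[i] * s + k[i] * kv * (1 - c) for i in range(3))

def signed_angle(u, v, axis):
    """Angle from u to v about `axis`, using their projections perpendicular to it."""
    k = normalize(axis)
    up = tuple(a - dot(u, k) * b for a, b in zip(u, k))
    vp = tuple(a - dot(v, k) * b for a, b in zip(v, k))
    return math.atan2(dot(cross(up, vp), k), dot(up, vp))

def restoring_rotations(A, B, A1, B1):
    """Return two (axis, angle) pairs that bring A1, B1 back onto A, B.

    Assumes unit vectors, A1 different from +A and -A, and B not on the line OA.
    """
    # Step 1: rotate about the normal to the plane A O A' so that A' lands on A.
    n = cross(A1, A)
    theta1 = math.atan2(math.sqrt(dot(n, n)), dot(A1, A))
    B2 = rotate(B1, n, theta1)
    # Step 2: keep A fixed and rotate about OA until the moved B' lands on B.
    theta2 = signed_angle(B2, B, A)
    return [(n, theta1), (A, theta2)]

# Demo: scramble the shell with two arbitrary rotations, then undo it in two steps.
A, B = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)

def scramble(v):
    return rotate(rotate(v, (0, 0, 1), 0.7), (1, 2, 3), 1.1)

A1, B1 = scramble(A), scramble(B)
for axis, angle in restoring_rotations(A, B, A1, B1):
    A1, B1 = rotate(A1, axis, angle), rotate(B1, axis, angle)
print(A1, B1)  # numerically very close to A and B
```

The scrambling rotations in the demo are arbitrary choices; any other motion of the shell should work as long as it avoids the degenerate cases listed above.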

Furthermore, two rotations composed are equivalent to a single rotation, a fact which to our modern sensibilities could be proved with matrix arguments. But the question still remains how to get from the two rotations to the third in a good way, and what the algebra associated with such a composition is.
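One way to make this concrete, sketched here in Python, is to represent each rotation by a unit quaternion (cos(θ/2), n sin(θ/2)), whose four components are what are nowadays called the Euler-Rodrigues parameters. Composition then becomes quaternion multiplication, and the axis and angle of the combined rotation can be read off directly. The function names are my own, and this is only an illustration of the idea, not a reproduction of Rodrigues’s or Altmann’s derivation.

```python
import math

def quat_from_axis_angle(axis, angle):
    """Unit quaternion (w, x, y, z) for a rotation by `angle` radians about `axis`."""
    x, y, z = axis
    norm = math.sqrt(x * x + y * y + z * z)
    s = math.sin(angle / 2) / norm
    return (math.cos(angle / 2), x * s, y * s, z * s)

def quat_mul(p, q):
    """Hamilton product p*q; as rotations, q is applied first and p second."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (pw * qw - px * qx - py * qy - pz * qz,
            pw * qx + px * qw + py * qz - pz * qy,
            pw * qy - px * qz + py * qw + pz * qx,
            pw * qz + px * qy - py * qx + pz * qw)

def axis_angle_from_quat(q):
    """Recover (axis, angle) from a unit quaternion; the axis is arbitrary if angle is 0."""
    w, x, y, z = q
    s = math.sqrt(x * x + y * y + z * z)
    if s < 1e-12:
        return (1.0, 0.0, 0.0), 0.0
    return (x / s, y / s, z / s), 2 * math.atan2(s, w)

def quat_rotate(q, v):
    """Rotate the vector v by the unit quaternion q via q v q*."""
    w, x, y, z = q
    conj = (w, -x, -y, -z)
    _, rx, ry, rz = quat_mul(quat_mul(q, (0.0, v[0], v[1], v[2])), conj)
    return (rx, ry, rz)

# A quarter turn about x followed by a quarter turn about y.
first = quat_from_axis_angle((1, 0, 0), math.pi / 2)
second = quat_from_axis_angle((0, 1, 0), math.pi / 2)
combined = quat_mul(second, first)
axis, angle = axis_angle_from_quat(combined)
print(axis, math.degrees(angle))
```

For these two quarter turns the combined rotation comes out as a 120 degree turn about the direction (1, 1, -1)/√3, which is not something I would have guessed by staring at the two original axes.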

Of course the happy accident is that studying these fairly concrete things actually gives us insight into quantum field theory but I’m not going to complain.

Now back to Rodrigues. What makes him interesting, besides proximity to interesting things, are some of the circumstances of his life. For one, he was of Jewish descent, living in the early 19th century, a time when some of the restrictions on educational opportunity imposed on Jews were being lifted or at least relaxed, paving the way for the undeniable contributions of Jewish mathematicians. Still, the issue was not yet resolved and may have influenced his choice to favor banking over academia. What entertains me most, however, is seeing him follow in that proud tradition of French mathematicians and scientists of getting embroiled in some rather non-mathematical enterprises.

This seems almost a condition of being French around this time in history, and Rodrigues was no different. Of course this is at heart a consequence of the fact that “political” and “French” might be argued to have been inseparable terms from the revolution of 1789 until close to a century after it, though that is arguably stretching things a bit.

Nevertheless we may often find a political connection. I am reminded of how Fourier served as a prefect under Napoleon and was supposedly rather wacky in his final years. Évariste Galois was a vocal republican, opposed to Charles X’s ambitions for an absolute monarchy and later to Louis-Philippe, and was arrested in 1831 after allegedly making a threatening toast to the latter. Binet, on the other hand, was perhaps too supportive of Charles X and had to resign his position in 1830 when the latter was deposed, the same event which also drove Cauchy out of France until 1838.

Olinde Rodrigues, on the other hand, had his political work connected not to the ruling forces but instead to an obscure political and religious movement called Saint-Simonism, originally headed by (you guessed it) the then comte de Saint-Simon, Claude Henri de Rouvroy, usually simply referred to as Saint-Simon. His ideas have their place in the 19th century development of utopian socialism. However, as with so many thinkers, he drove himself close to financial ruin and, depressed and disappointed, attempted to take his own life in 1823.

“He then placed his watch on his desk and wrote his last thoughts until the allotted time arrived. His aim, alas, was so poor that of the seven shots to his head only one penetrated his cranium, causing him to lose one eye but not his life” (Frank Manuel through Altmann)

Poor Saint-Simon, but had he not lived our narrative of Rodrigues would have become ever so much duller, as Olinde Rodrigues, in the process of supporting Saint-Simon’s recovery and writing in the following years, grew to regard his work as prophetic and immensely important. Though my interest was originally in Rodrigues, I took the time to read one of Saint-Simon’s last works, New Christianity (original French), which exemplifies the religious character of his later writings and which I would summarize as emphasizing the social agenda of Christianity in working to improve the condition of the poorer classes.

After Saint-Simon’s death in 1825, Rodrigues along with others formed what was essentially a sect devoted to Saint-Simonism, holding rites where he and others would be referred to as Père by brothers of the movement. It had its ups and downs as I interpret it, culminating in a more charismatic follower, Enfantin, taking charge of it and turning it towards sexuality and the morality of incest and adultery (because of course that’s what was going to happen). Rodrigues eventually broke with the movement, which died shortly afterwards, but it’s an entertaining episode.

The texts by Altmann I read on the subject of Olinde Rodrigues were, besides the aforementioned foreword, this shorter article along with a longer preview chapter of an AMS book, and furthermore yet another Altmann article focusing more on the history of quaternions.

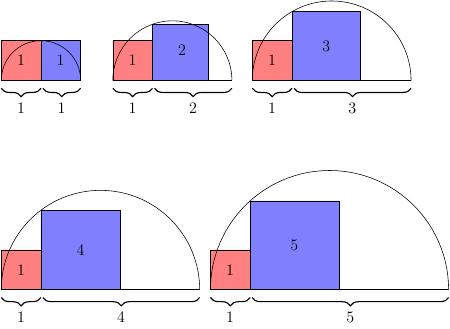

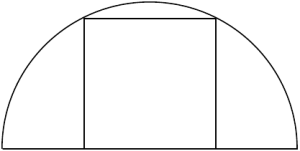

is the side of the square then from the construction we know that

and dammit it’s the equation for the golden ratio

. Explicitly